Trying out Rancher

This time it’s a bit different: this post has very little code and a lot of screenshots, as I was primarily working with a GUI.

When reading about (and working with) K3s, it’s almost impossible not to hear about Rancher, the company behind K3s. What had me slightly confused, though, is their primary product: Rancher (the software).

The web page says

Managed Kubernetes Cluster Operations

but that doesn’t really tell me much. Neither do their presentations. So what better way to get a feel for it than to take it for a spin :) (There are some enterprise offerings, but the base product is free.)

Getting started

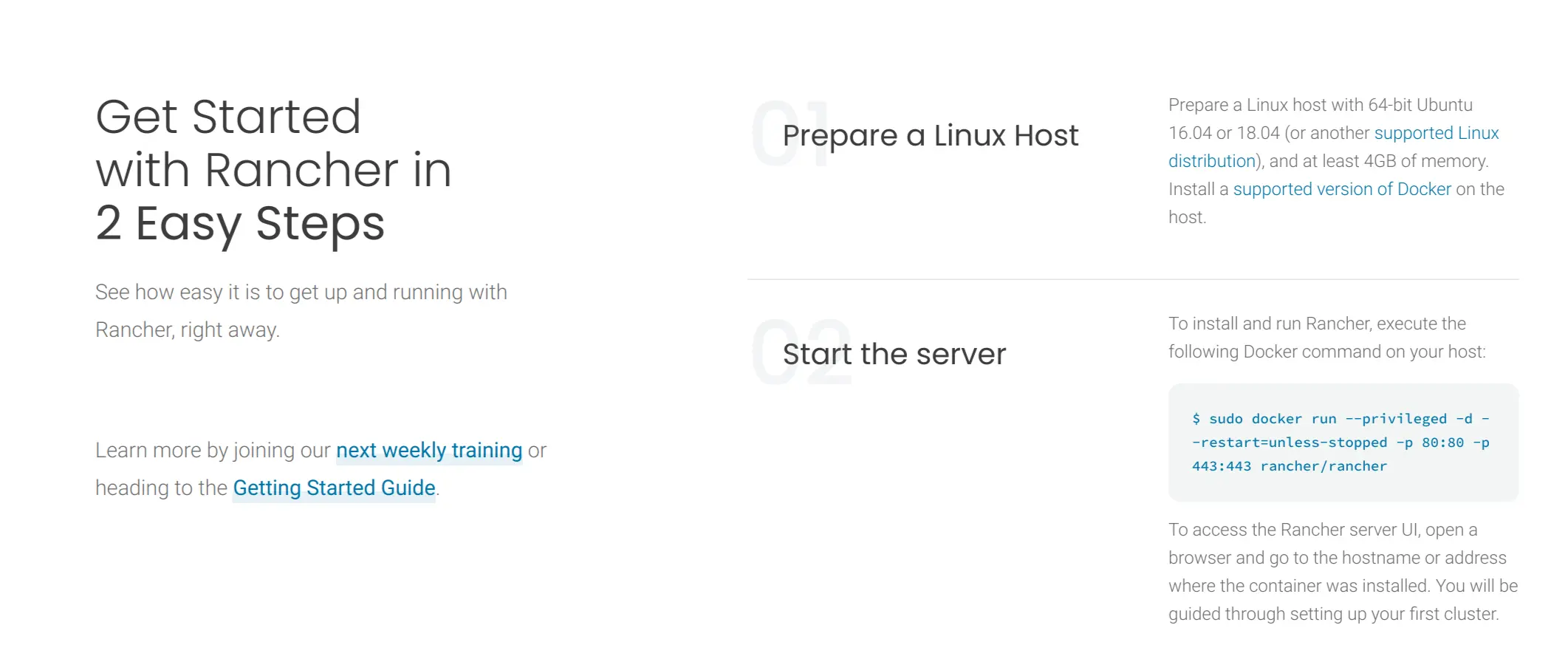

After clicking the fetching Getting Started button, I’m presented with these rather short instructions:

OK, prerequisites first. I spun up a new VM on DigitalOcean with Ubuntu 20.04 (which is on the list of supported distros and is not ancient), 8 GB of RAM and 4 vCPUs. I was completely guessing here: with no idea what I actually need, I picked a “smallish VM that should still handle any reasonable application”.

The second step is getting Docker onto my machine, and this is where picking Ubuntu comes in handy.

Just a few minutes later, I can proceed to actually running Rancher via Docker.

And just like that we’re up and listening on port 80!

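If you’d rather confirm that from a script than from a browser, a plain TCP connect tells you whether anything is answering on port 80. Below is a minimal Python sketch of such a check. It’s my own helper, not something Rancher provides, and the IP address is a hypothetical placeholder from the 203.0.113.0/24 documentation range.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and DNS failures alike.
        return False


if __name__ == "__main__":
    # Hypothetical placeholder: replace with your VM's public IP.
    vm_ip = "203.0.113.10"
    print(f"Rancher UI on {vm_ip}:80 reachable: {is_listening(vm_ip, 80)}")
```

A successful connect only proves that something is listening. It doesn’t prove that the thing is Rancher, but for a quick sanity check after `docker run` that’s usually enough.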

First impressions

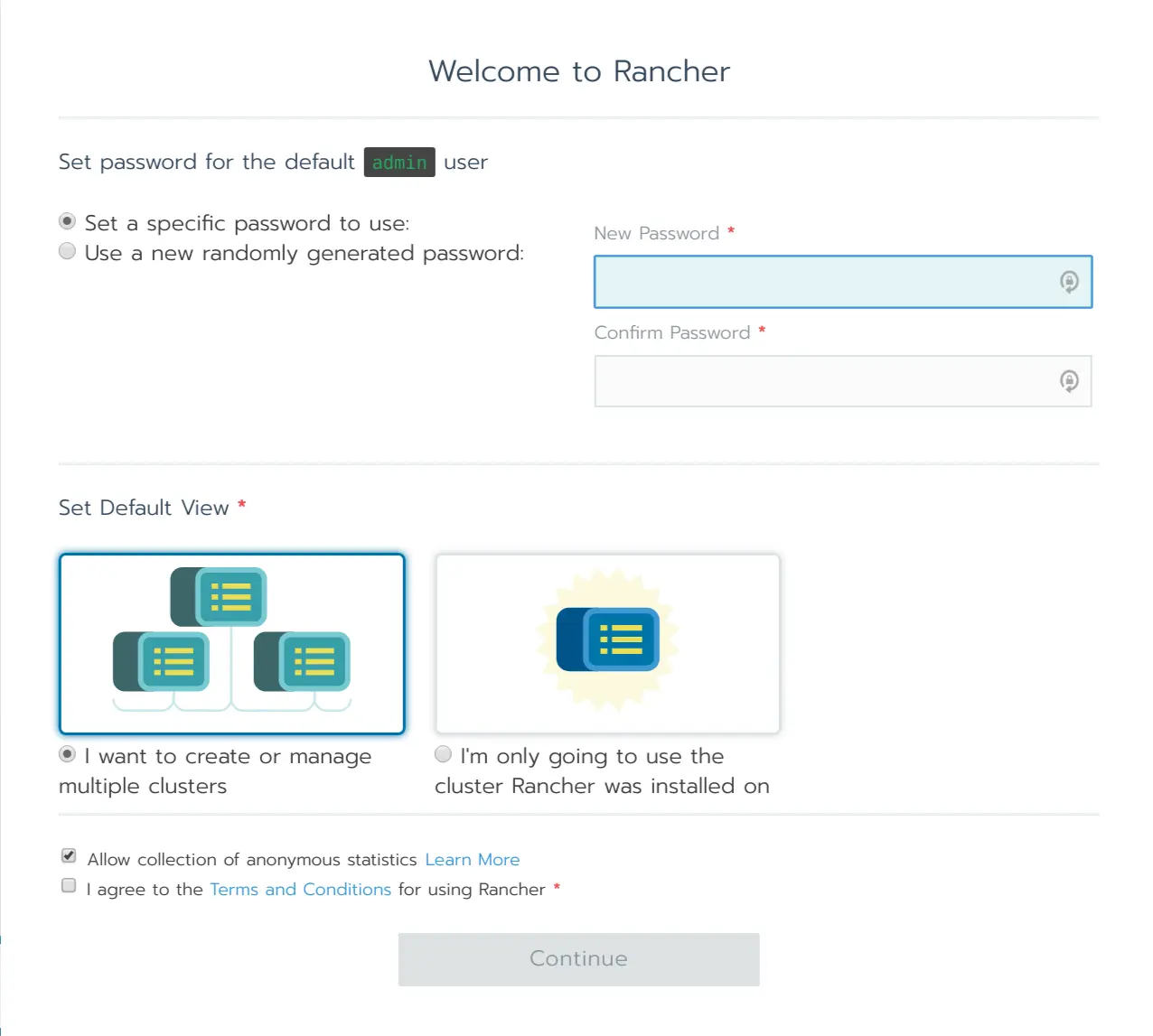

I navigate to port 80 of my new VM from my laptop and I’m greeted with a first-time setup. Nice :)

Let’s go with multiple clusters, to see what this is all about.

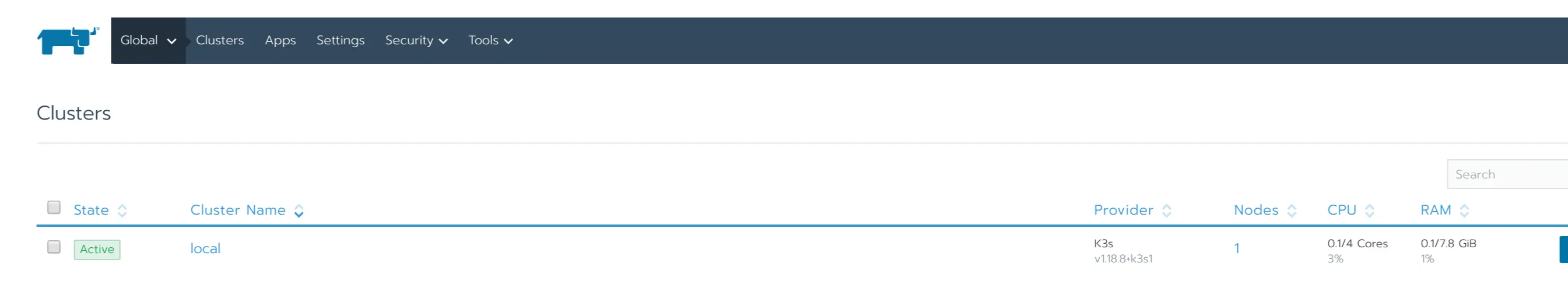

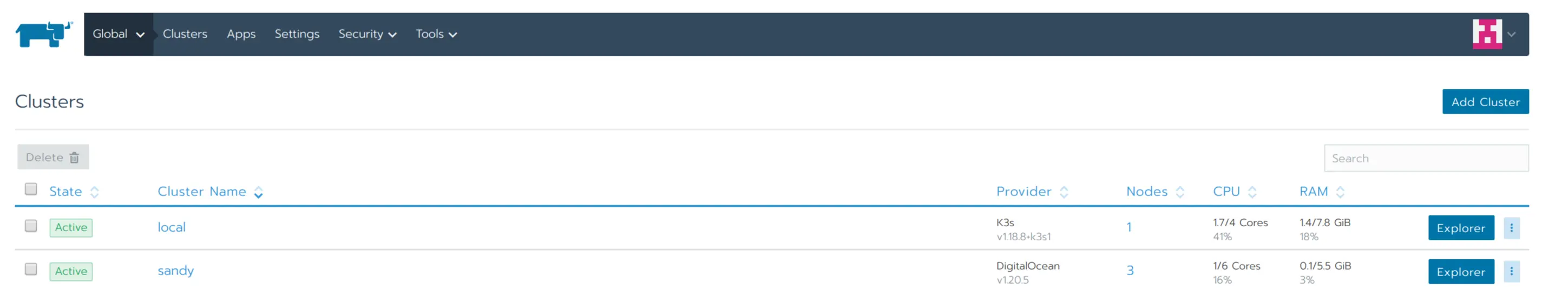

Huh. Apparently we got a local K3s cluster as well. I’m guessing “local” means it’s running on the same VM (there’s no other real option anyway).

I’m wondering if you can also install Rancher on an existing cluster. A quick search suggests the answer is yes.

What happens if I click on “local”?

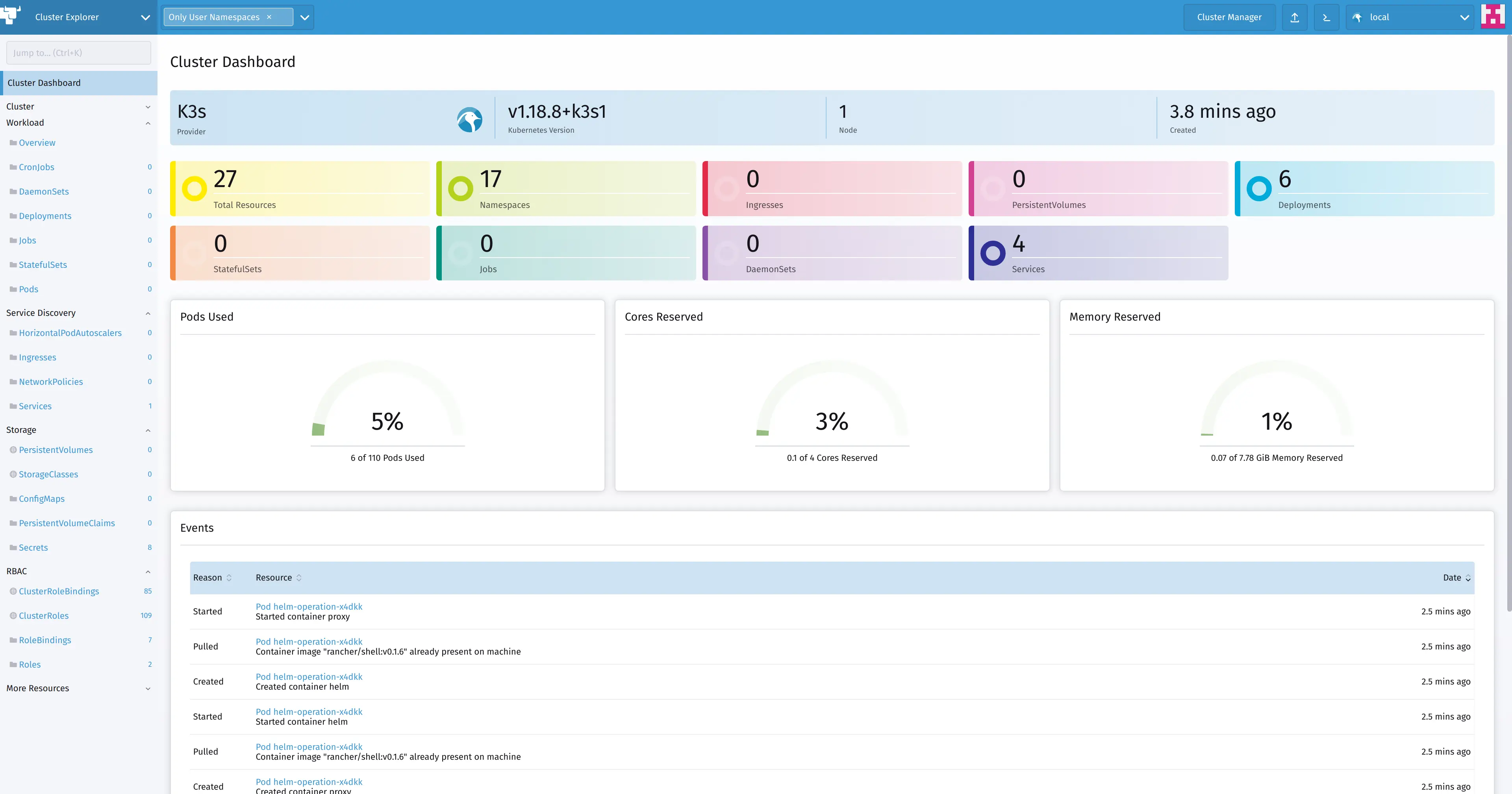

Vaguely reminiscent of the K8s Web UI. Looks useful.

Creating clusters

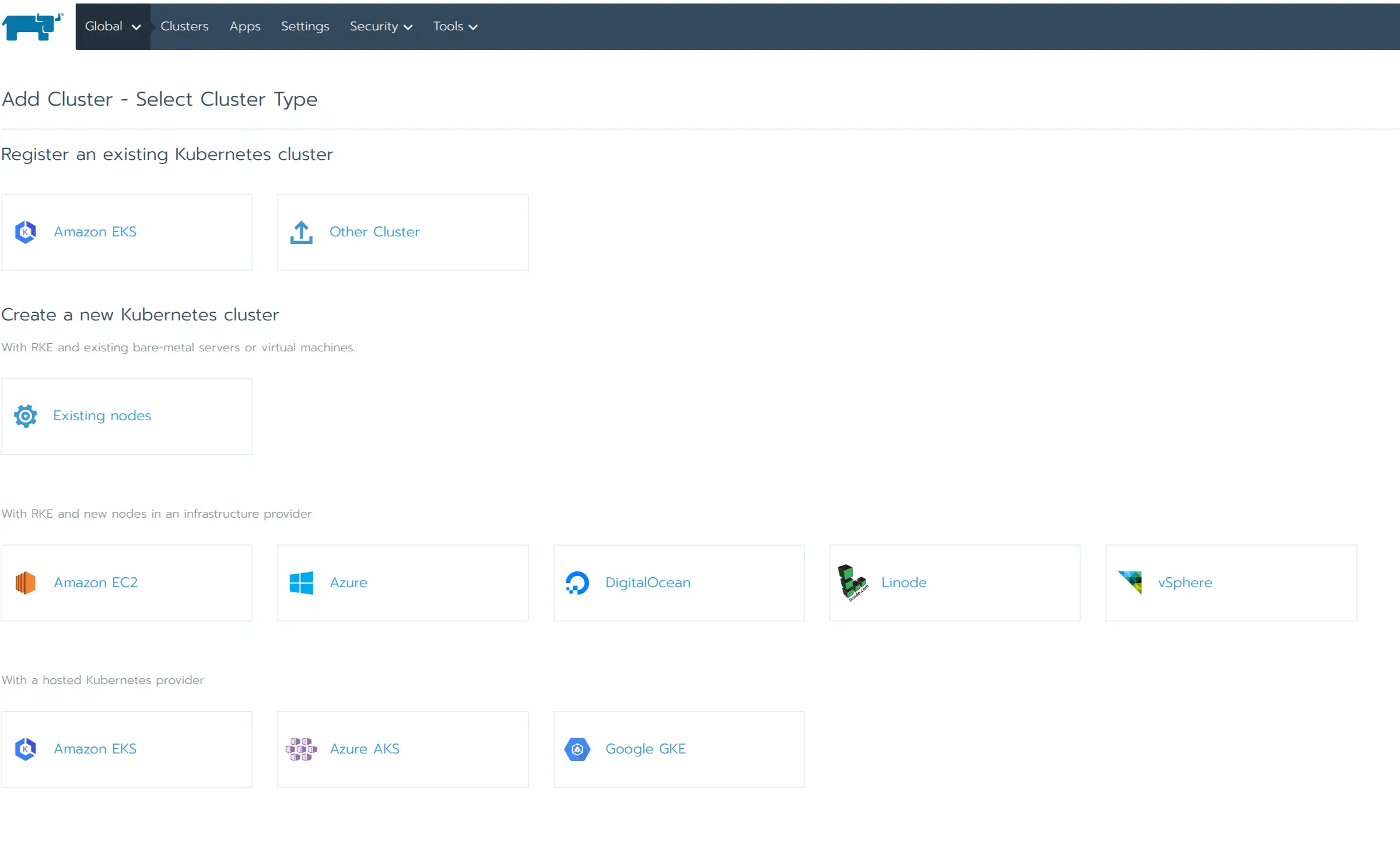

But I’m not interested in a dashboard for a K3s instance; I want to try setting up some clusters :D Let’s try clicking Add Cluster.

That’s quite a list of providers already. A quite intriguing one is “Existing nodes”. Also interesting to me is the ability to connect managed clusters: you really do get one central management tool for all your clusters. Huh, you can even automatically provision VMs on vSphere. But I’m going with DigitalOcean today, as this is where I’m running my experiment. A happy accident, really: I hadn’t checked upfront what was supported.

I did do some digging at this point, and apparently you can install extra (even third-party) providers to extend this. For example, I found a tutorial for setting up a cluster on Hetzner, another provider I sometimes use for my projects. I like this extensibility a lot.

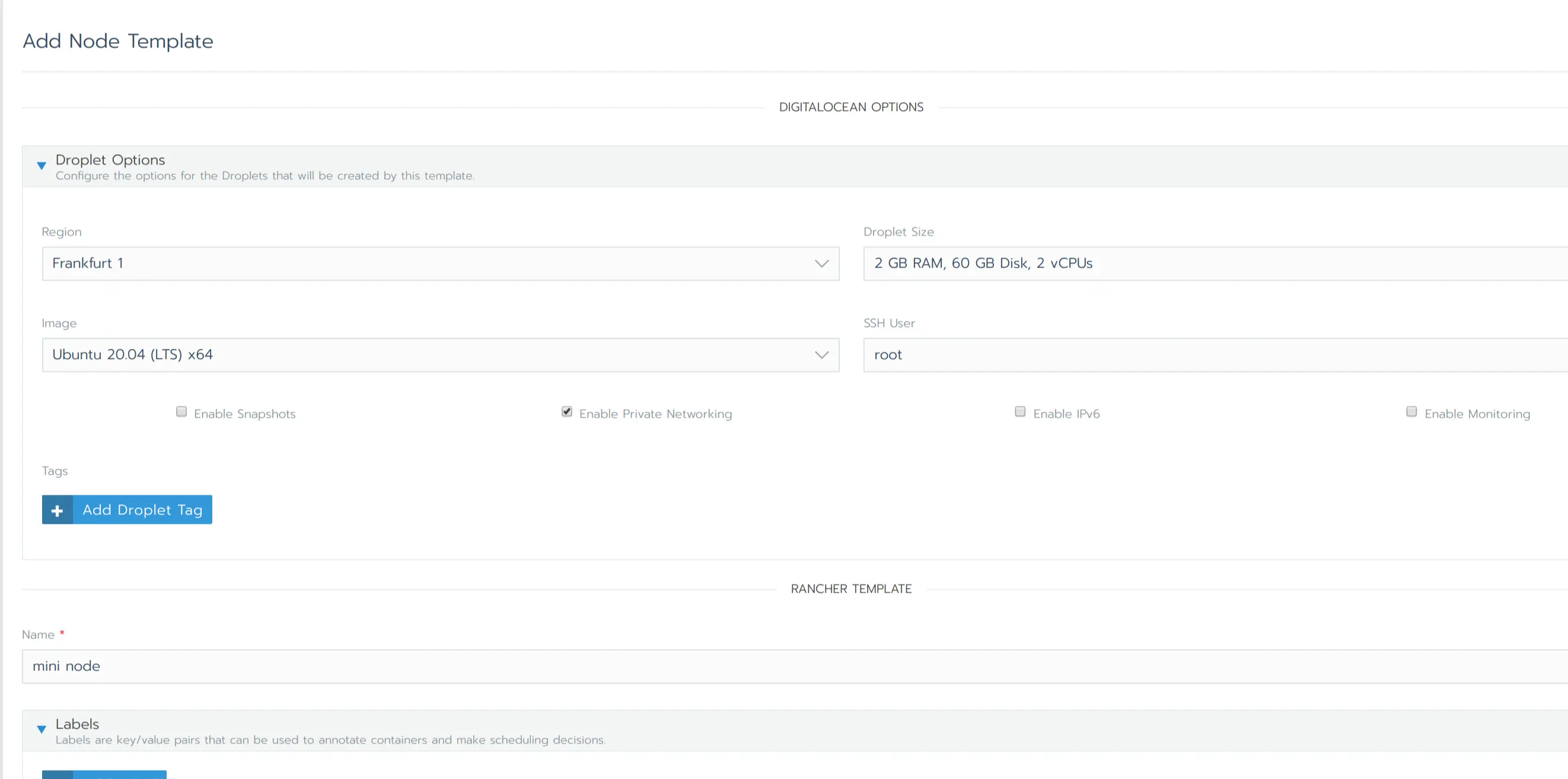

First I need to configure a template for my nodes (presumably because this is the first time and I don’t have any yet). I’m going with something small, as I don’t plan to run any substantial workloads on this cluster.

Note: at this point I was also prompted to configure the DO integration by providing an API token. No magic integration, but super straightforward.

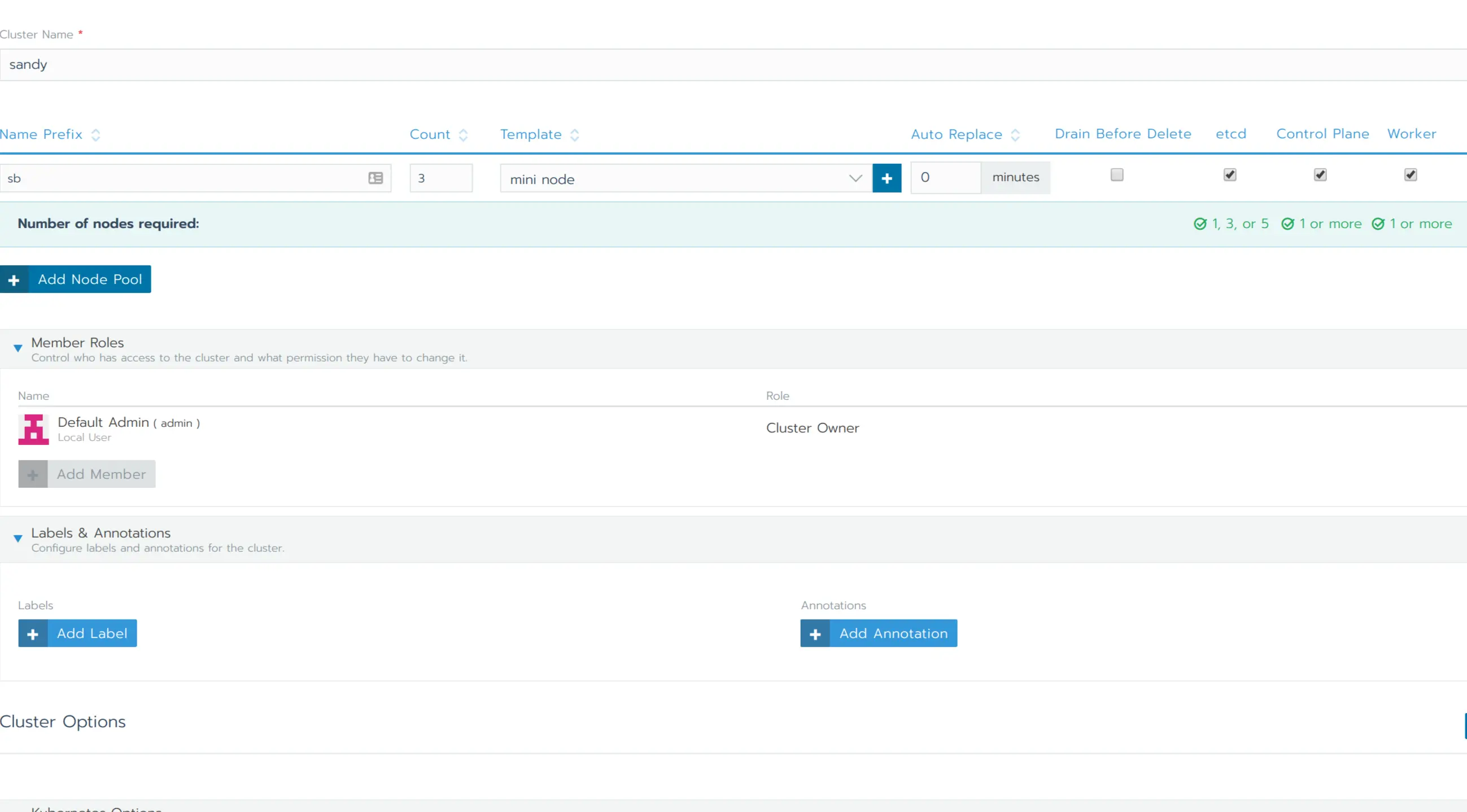

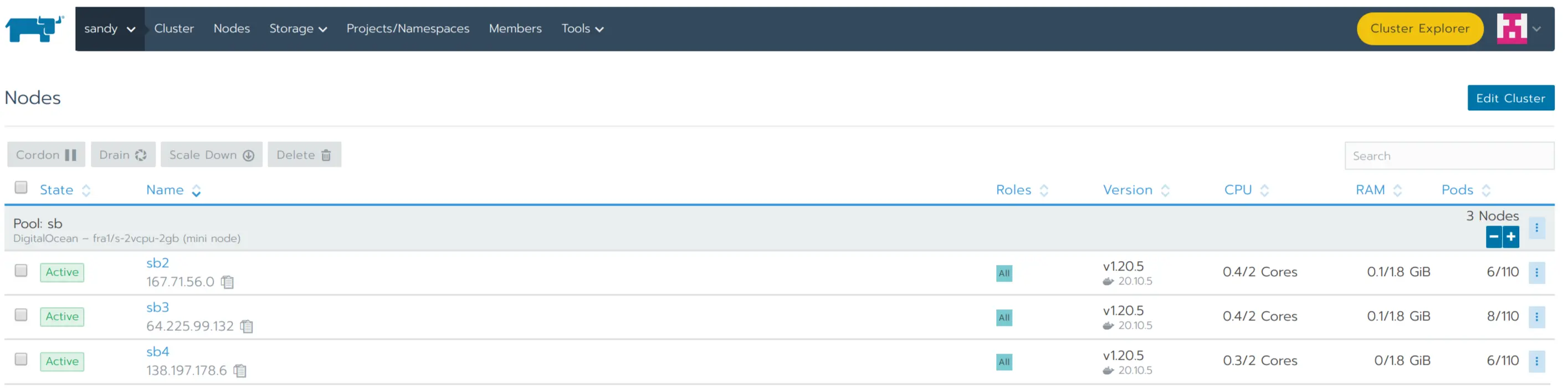

Time to set up my node pools. If I’m reading this right, I’m configuring how many VMs of which template to run and which services to run on them. There’s even validation (green checkmarks) that my setup yields a functional cluster. I won’t bother with the other options; let’s just take the defaults out for a spin.
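Here’s my reading of what those checkmarks verify, sketched in Python. Each of the roles a cluster needs (etcd, control plane, worker) has to be covered by at least one node in some pool. I’ve also added the usual etcd advice to run an odd number of members, because an even count tolerates no more failures than one member fewer. This is an illustrative model under those assumptions, not Rancher’s validation code. NodePool, missing_roles, etcd_warning and the template names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# The three roles a node can take in a Rancher-provisioned (RKE) cluster.
ROLES = ("etcd", "control_plane", "worker")


@dataclass(frozen=True)
class NodePool:
    """Hypothetical model of one node pool: `count` VMs built from one template."""
    name: str
    template: str  # name of the node template (VM size, region, image)
    count: int
    etcd: bool = False
    control_plane: bool = False
    worker: bool = False


def missing_roles(pools: list[NodePool]) -> list[str]:
    """Roles that no node in any pool would run (an empty list means all are covered)."""
    return [
        role
        for role in ROLES
        if not any(getattr(pool, role) and pool.count > 0 for pool in pools)
    ]


def etcd_warning(pools: list[NodePool]) -> str | None:
    """Warn when the etcd member count is even: it tolerates no more failures than one fewer."""
    members = sum(pool.count for pool in pools if pool.etcd)
    if members > 0 and members % 2 == 0:
        return f"{members} etcd nodes: an odd number is recommended for quorum"
    return None


if __name__ == "__main__":
    # One small pool running every role, roughly a minimal test cluster.
    pools = [NodePool("pool1", "small-droplet", 1, etcd=True, control_plane=True, worker=True)]
    print(missing_roles(pools))  # []
    print(etcd_warning(pools))   # None
```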

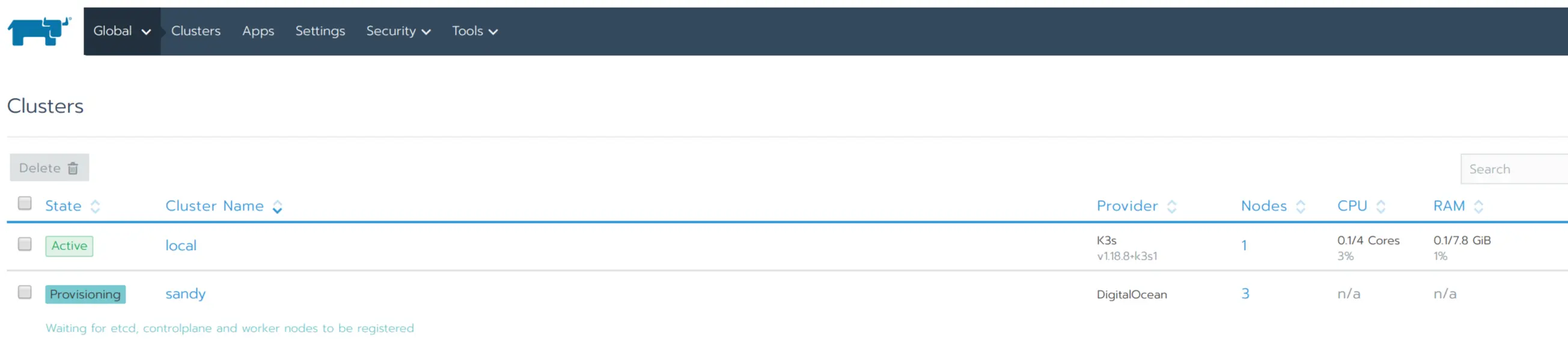

And now we wait…

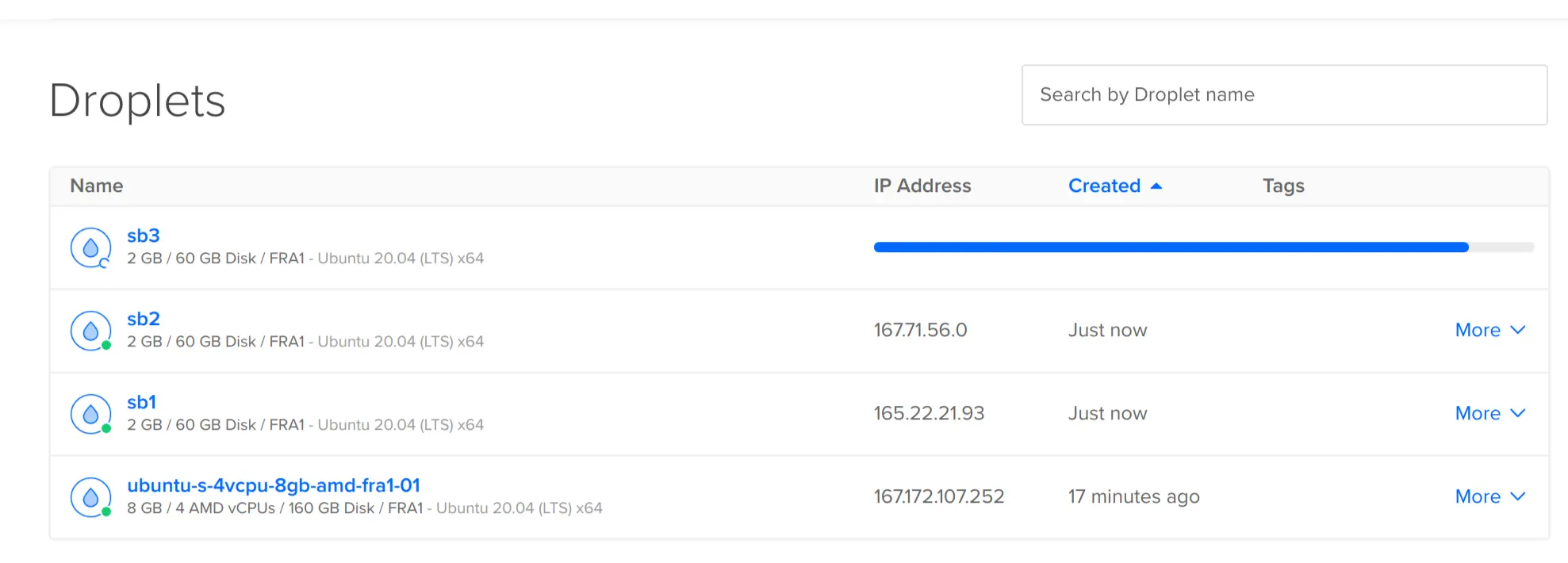

Meanwhile, in the DO console I can see droplets coming up.

After about 10 minutes, the cluster reports as “active”. Great success!

Clicking on it, I get an overview of my new nodes.

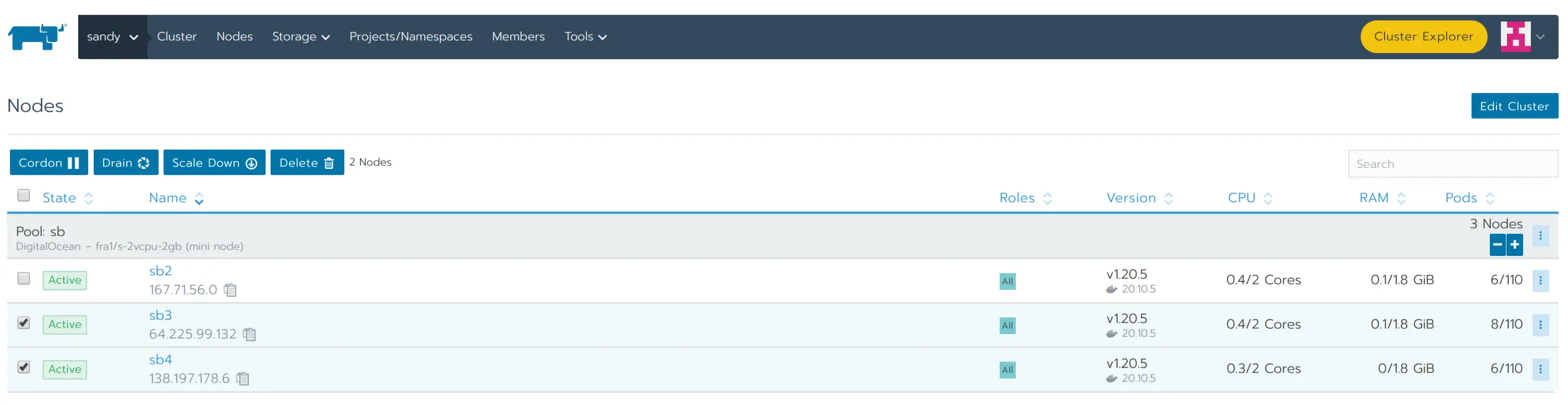

And some management options too.

It doesn’t seem like much… but stop and think about it for a moment. This abstracts over the underlying compute provider, which is great if you’re running multiple clusters on a mix of infrastructure (e.g. AWS + on-prem).

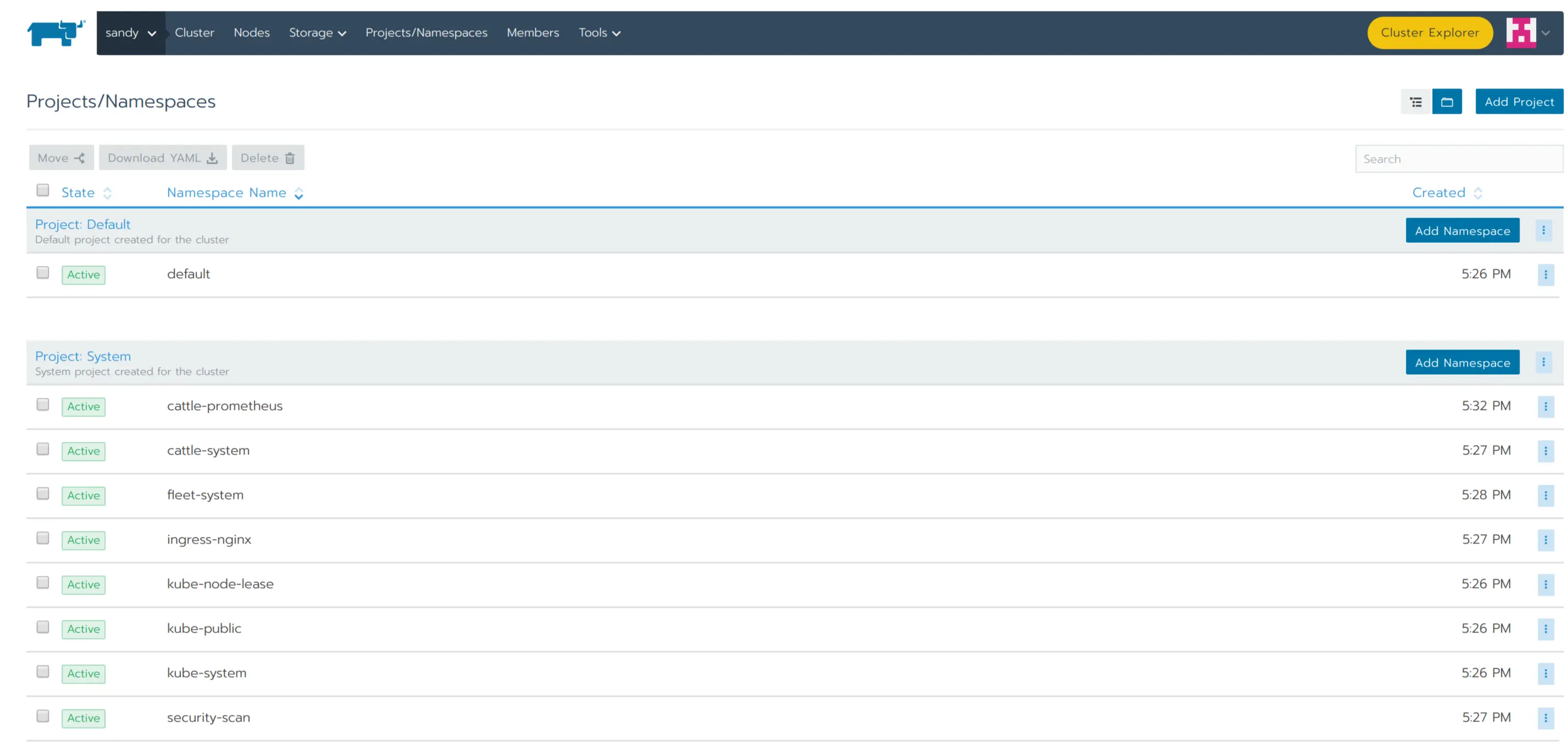

I can also pick “namespaces” from the menu and get an overview of the K8s namespaces on my cluster.

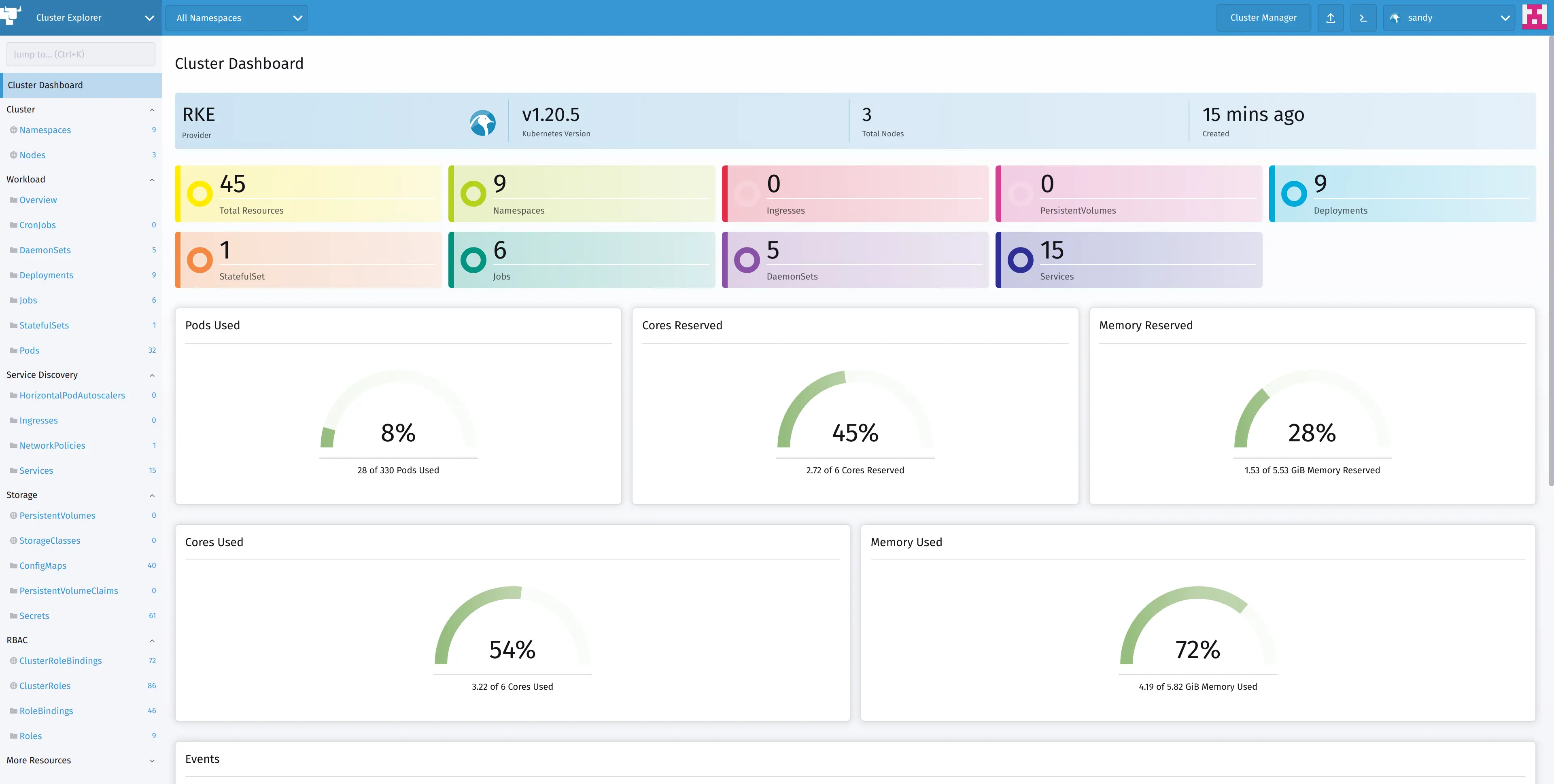

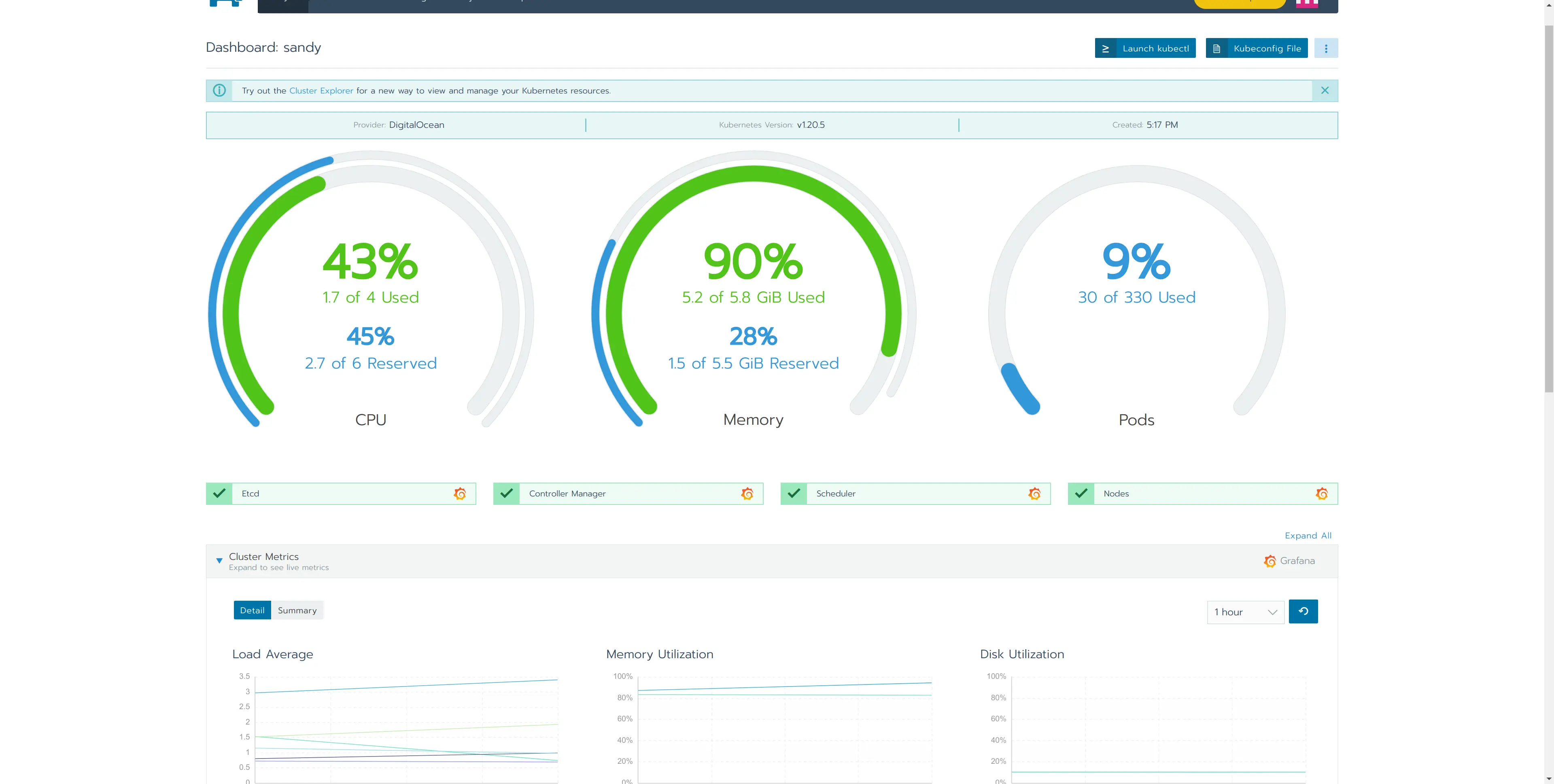

And yes - there is still the dashboard.

One interesting difference pops out: we’re not running K3s anymore but RKE - Rancher Kubernetes Engine.

RKE is a CNCF-certified Kubernetes distribution that runs entirely within Docker containers. It solves the common frustration of installation complexity with Kubernetes by removing most host dependencies and presenting a stable path for deployment, upgrades, and rollbacks.

The key point here is that we got what is basically a managed cluster (K8s as a service) using only a compute provider (yes, I know DO also offers managed K8s, but that’s a different beast altogether).

Features

With a few more clicks, I got a metrics stack installed in the cluster and now have more detailed stats.

And a configured Grafana instance too!

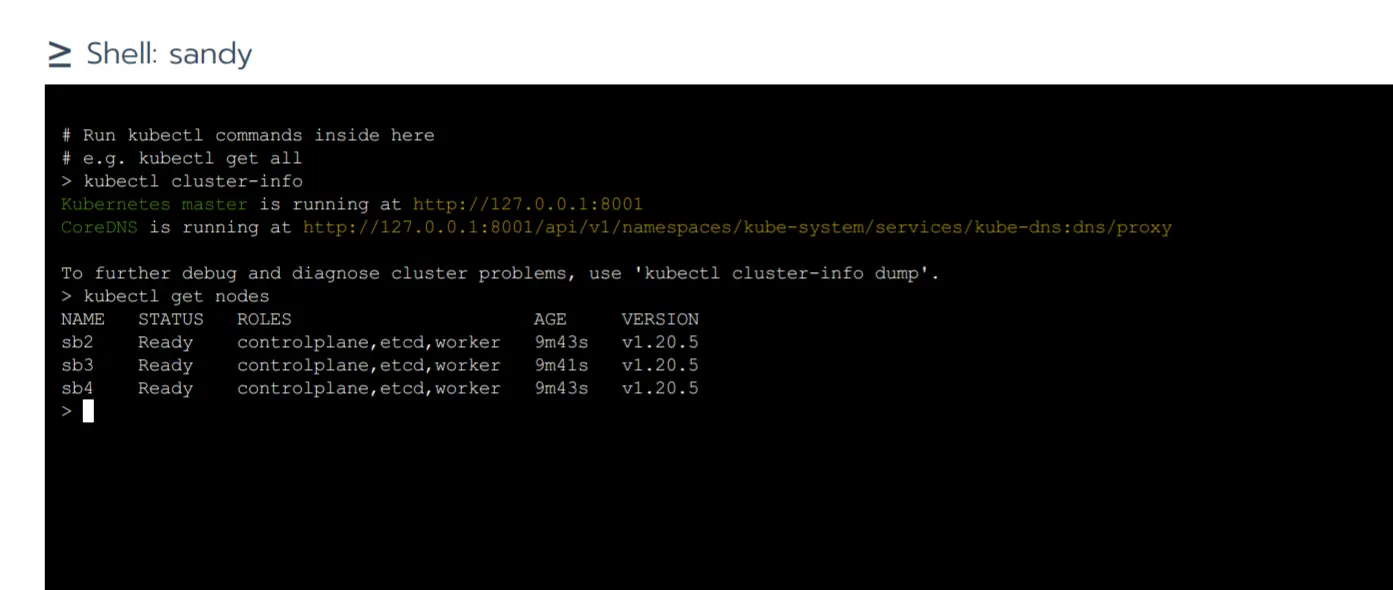

I can get a kubeconfig file directly from the UI and use it to connect to my new cluster like any other cluster.

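Using the downloaded file works like any other kubeconfig: hand it to kubectl via the --kubeconfig flag (or the KUBECONFIG environment variable). The small Python helper below builds such a call. It only runs the command if kubectl is installed and the file exists. The path and the do-test.yaml file name are hypothetical.

```python
from __future__ import annotations

import shutil
import subprocess
from pathlib import Path


def kubectl_cmd(kubeconfig: Path, *args: str) -> list[str]:
    """Build a kubectl command line that uses a specific kubeconfig file."""
    return ["kubectl", "--kubeconfig", str(kubeconfig), *args]


if __name__ == "__main__":
    # Hypothetical location: wherever you saved the file downloaded from Rancher.
    config = Path.home() / "Downloads" / "do-test.yaml"
    cmd = kubectl_cmd(config, "get", "nodes", "-o", "wide")
    print(" ".join(cmd))
    # Only run it when kubectl is installed and the file is actually there.
    if shutil.which("kubectl") and config.exists():
        subprocess.run(cmd, check=True)
```

Passing the file explicitly keeps the new cluster out of your default ~/.kube/config until you decide to merge it.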

But if I can’t be bothered and just want to poke around a bit, there’s even a convenient web-based shell.

Apps

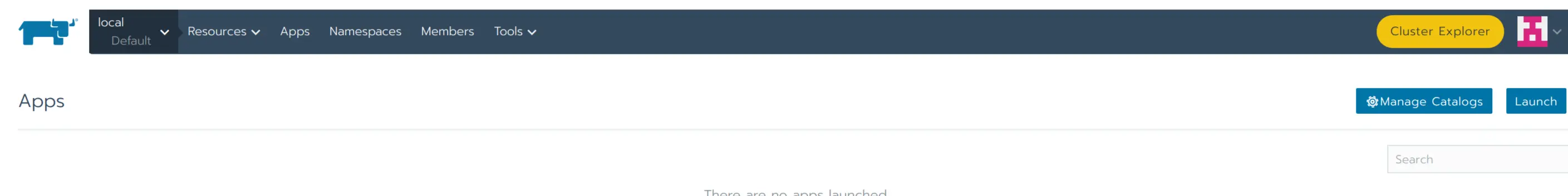

Moving on to the last element I see in the UI: Apps.

Looks like some form of prepackaged software.

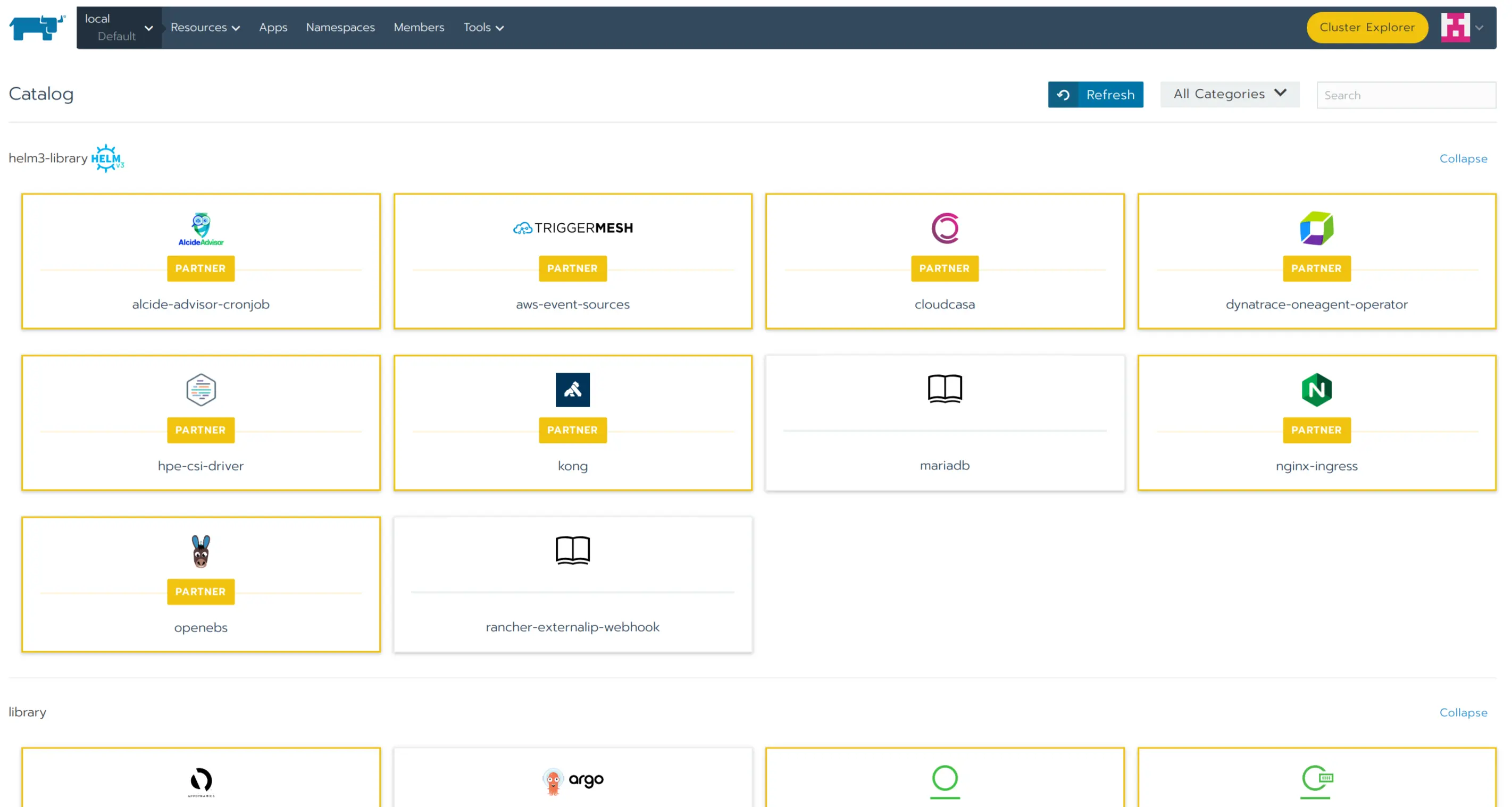

Digging deeper confirms this is in fact a UI for Helm charts.
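Since Apps is a front end for Helm, the form fields roughly correspond to chart values. On the command line you’d pass the same values to helm install, e.g. with --set key=value, where nested keys are joined with dots. Below is a minimal Python sketch of that flattening. The chart reference (bitnami/wordpress) and the value keys in the usage part are illustrative assumptions, not necessarily what Rancher’s catalog uses. Lists and values containing commas need Helm’s extra index/escaping syntax, so the sketch rejects them instead of guessing.

```python
from __future__ import annotations


def to_set_flags(values: dict, prefix: str = "") -> list[str]:
    """Flatten nested chart values into `helm install --set key=value` arguments.

    Handles scalars and nested dicts only. Lists and values containing commas
    need Helm's index/escaping syntax, so they are rejected rather than guessed.
    """
    flags: list[str] = []
    for key, value in values.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flags.extend(to_set_flags(value, path))  # recurse: ingress.enabled, ...
            continue
        if isinstance(value, bool):  # check bool before int: bool is an int subclass
            text = "true" if value else "false"
        elif isinstance(value, (int, float, str)):
            text = str(value)
        else:
            raise TypeError(f"unsupported value for {path!r}: {value!r}")
        if "," in text:
            raise ValueError(f"value for {path!r} contains a comma")
        flags += ["--set", f"{path}={text}"]
    return flags


if __name__ == "__main__":
    # Illustrative values; check the chart's own values.yaml for the real keys.
    values = {"wordpressUsername": "admin", "ingress": {"enabled": True, "hostname": "wp.example.com"}}
    cmd = ["helm", "install", "my-wordpress", "bitnami/wordpress", *to_set_flags(values)]
    print(" ".join(cmd))
```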

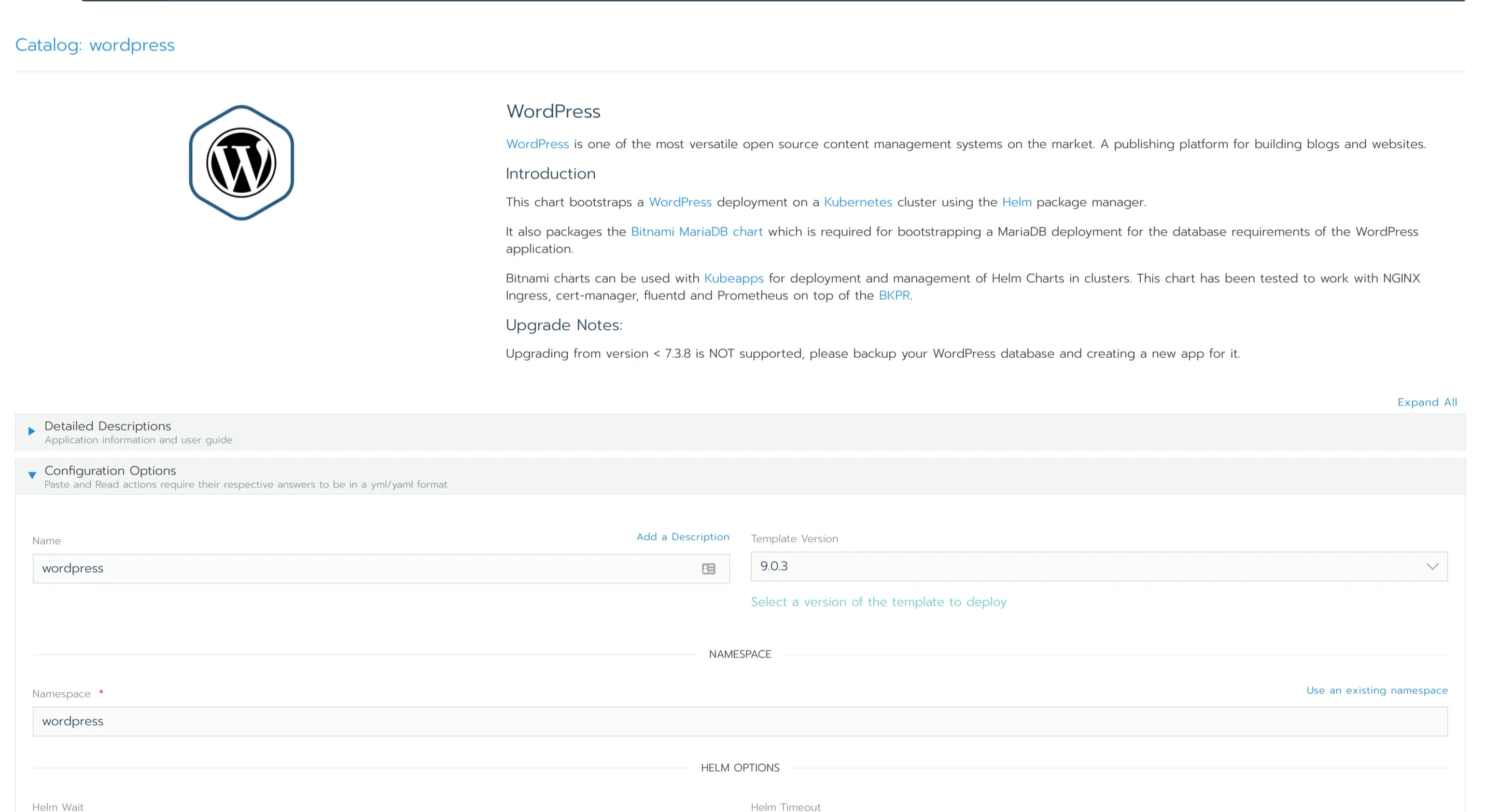

Let’s configure a WordPress instance, then. This should be a nice test of whether our cluster is in fact fully functional and provides everything we could possibly want.

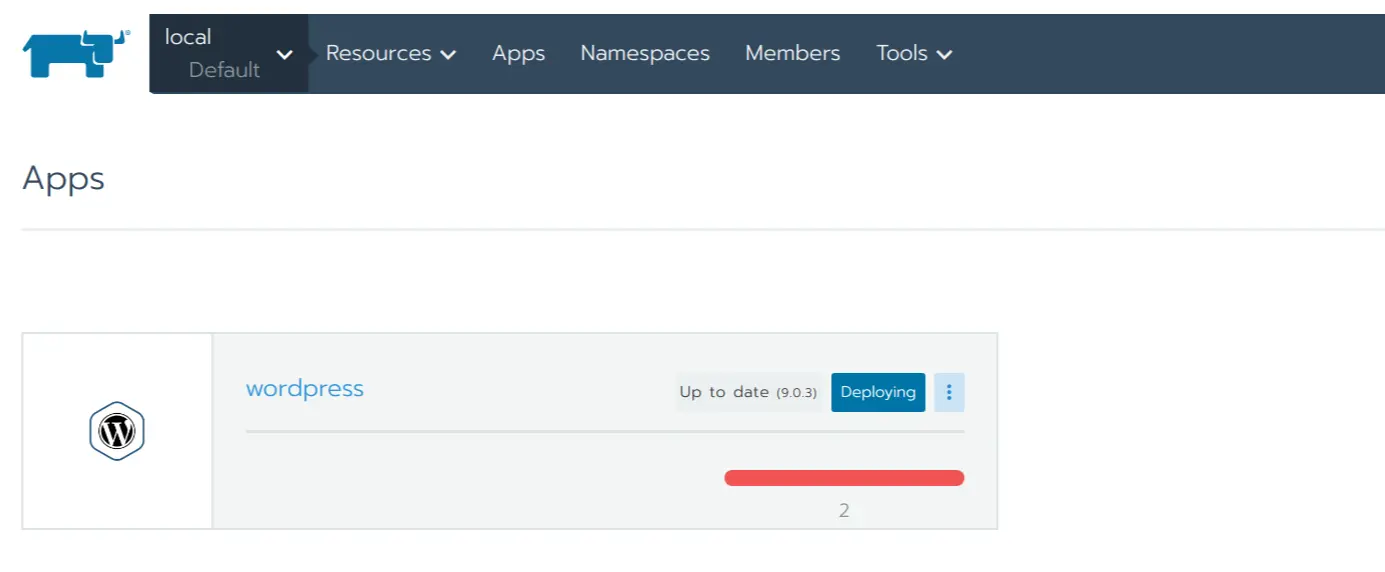

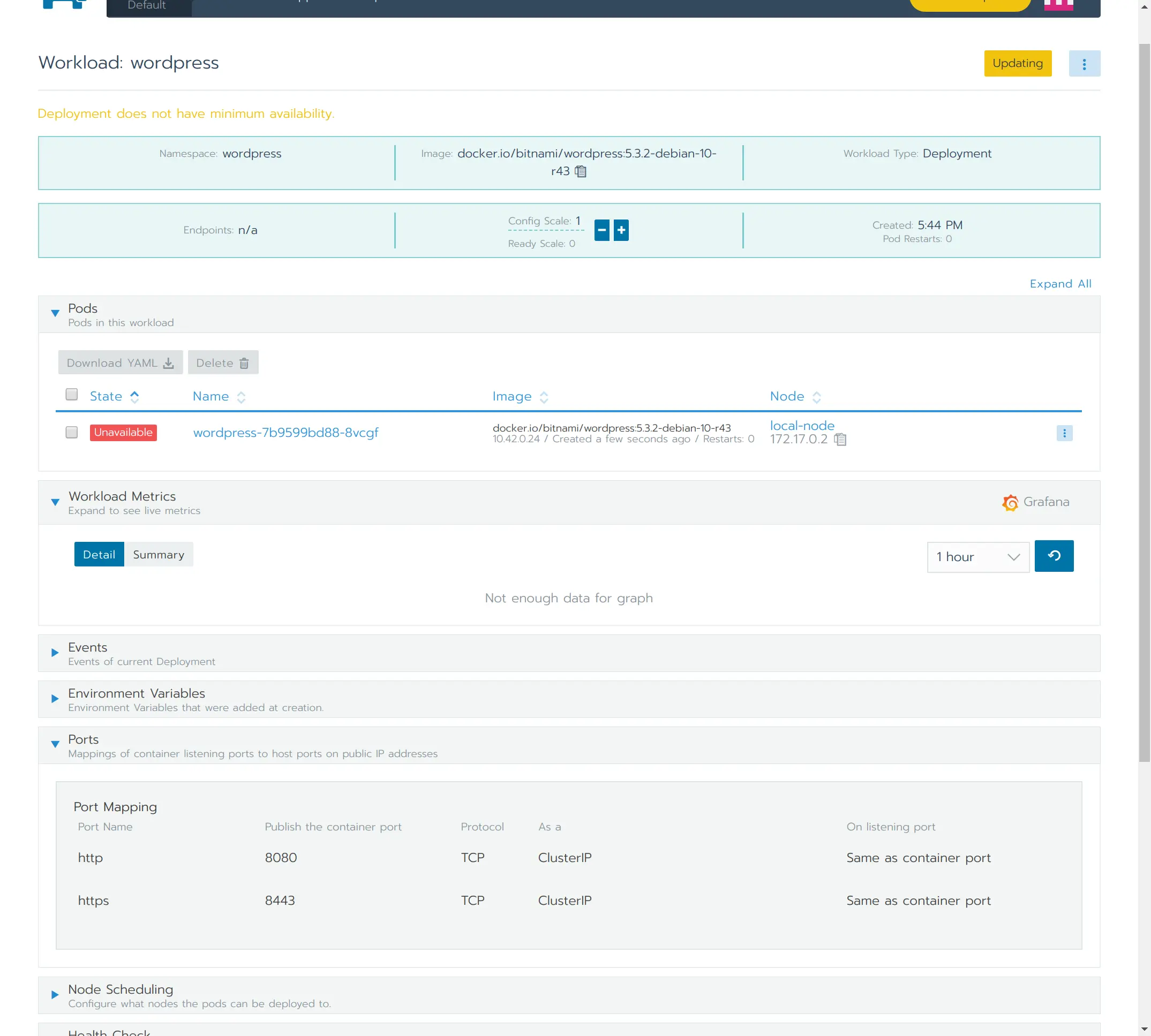

It takes a moment to deploy the chart. I can now zoom in and see the actual deployment in action.

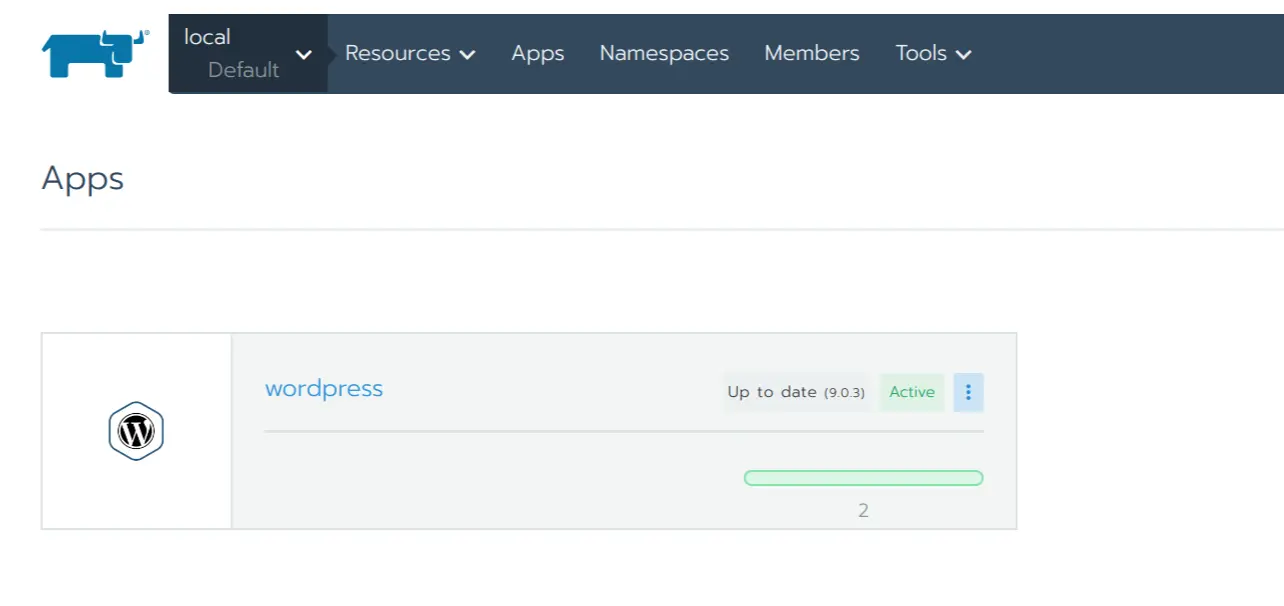

A few more minutes and we’re green.

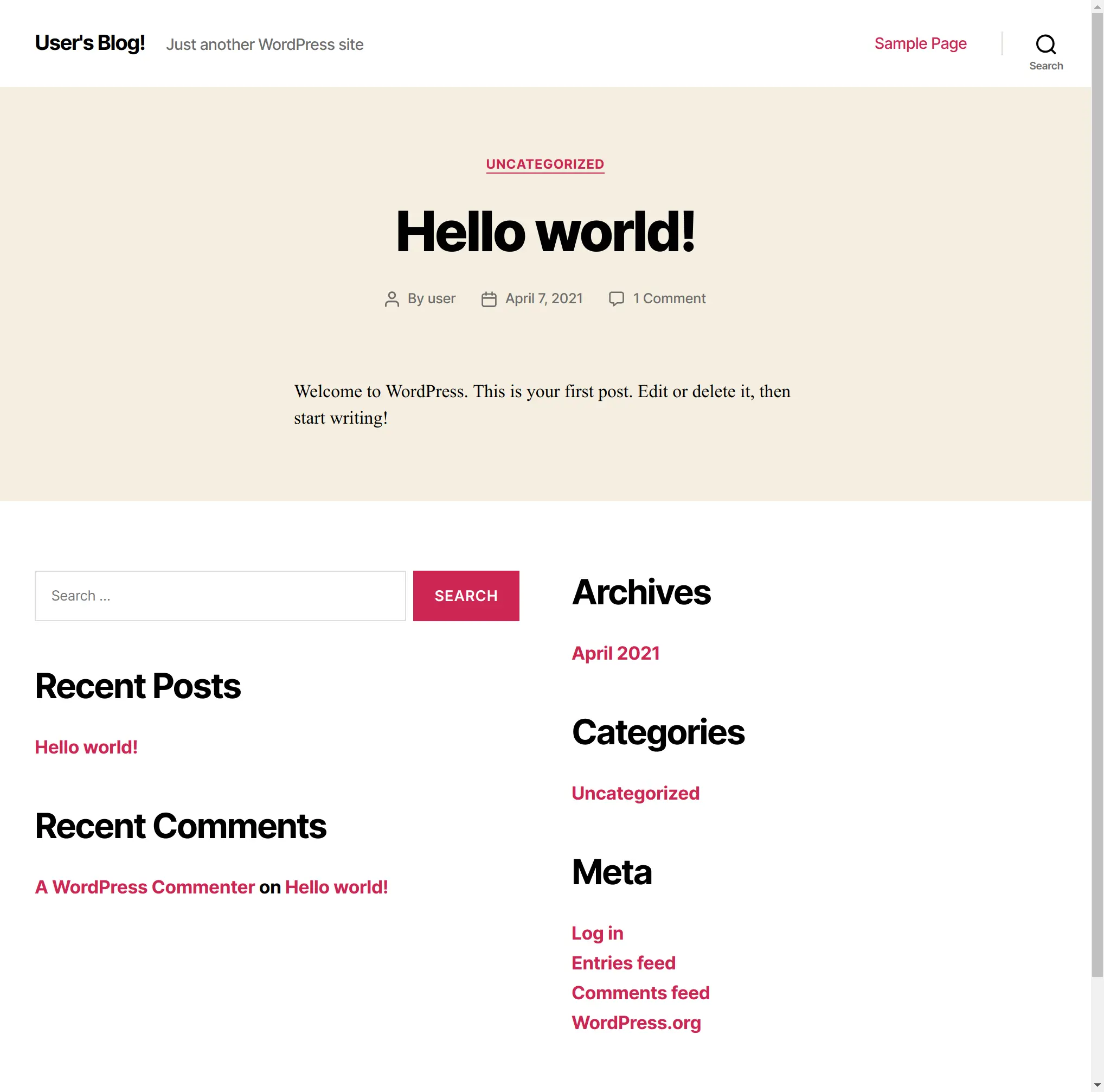

A quick /etc/hosts edit later, I’m accessing my WP instance. I’m pointing my browser at one of the nodes, where I’m hitting an ingress controller that routes to an internal service based on the virtual host (hence the /etc/hosts edit), which in turn routes to the actual pod running somewhere in the cluster.
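The /etc/hosts edit matters because the ingress controller chooses a backend by the Host header. The browser only sends the hostname if you browse by name rather than by IP. Mapping the name to one node’s IP takes care of that, with a line such as “203.0.113.10 wp.example.com” (both values hypothetical). The toy Python model below sketches the name-based lookup: hostname to service, then service to one of its pods. Round-robin pod selection and the placeholder addresses are my simplifications. A real ingress controller handles much more (paths, TLS, health checks).

```python
from __future__ import annotations

from itertools import cycle


class HostRouter:
    """Toy model of name-based virtual hosting: Host header -> service -> pod."""

    def __init__(self, rules: dict[str, str], endpoints: dict[str, list[str]]):
        # Ingress-style rules: lowercase hostname -> service name.
        self.rules = {host.lower(): svc for host, svc in rules.items()}
        # Each service cycles through its pod addresses (services with no pods are skipped).
        self._pods = {svc: cycle(pods) for svc, pods in endpoints.items() if pods}

    def route(self, host_header: str) -> str | None:
        """Return the pod address that should receive a request, or None if nothing matches."""
        host = host_header.split(":", 1)[0].lower()  # drop an optional ":port"
        service = self.rules.get(host)
        if service is None or service not in self._pods:
            return None  # a real controller would typically answer from a default backend (404)
        return next(self._pods[service])


if __name__ == "__main__":
    # Hypothetical hostname and pod IPs.
    router = HostRouter({"wp.example.com": "wordpress"}, {"wordpress": ["10.42.0.5", "10.42.1.7"]})
    print(router.route("wp.example.com"))  # by name: matches the rule
    print(router.route("203.0.113.10"))    # by IP: no rule matches
```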

Removing a cluster

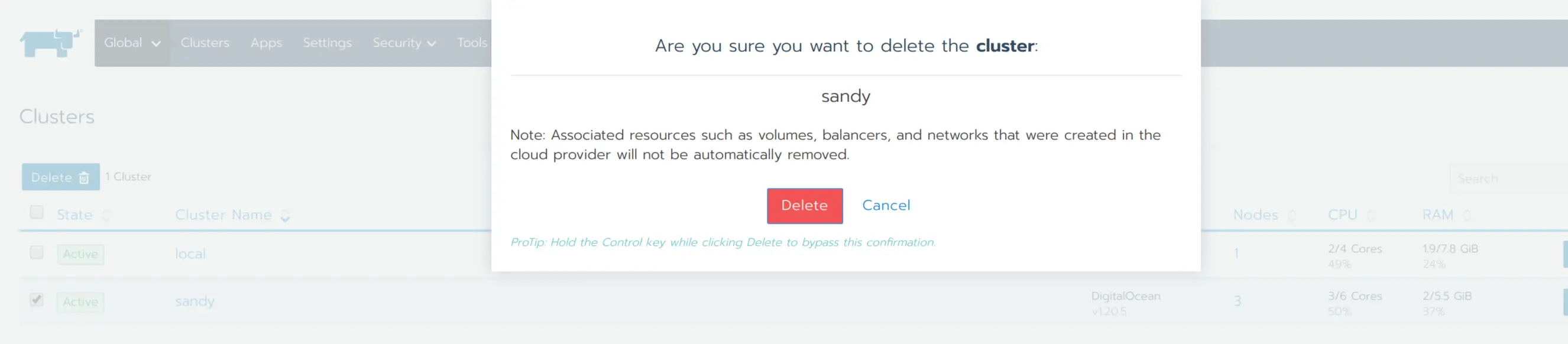

That was enough fun. Let’s tear the cluster down to see if it cleans up after itself.

It entered a “Removing” state

and a few moments later it’s gone. So are all traces of it from my DO dashboard.

Closing thoughts

I think I get “Managed Kubernetes Cluster Operations” now: provisioning, monitoring, day-2 ops, running some common workloads. The primary use case seems to be managing multiple clusters, but it’s also an interesting alternative for providing “managed” clusters in places where you don’t have that option.

Last modified on 2021-04-14
